How I Back Up (2021 Edition)

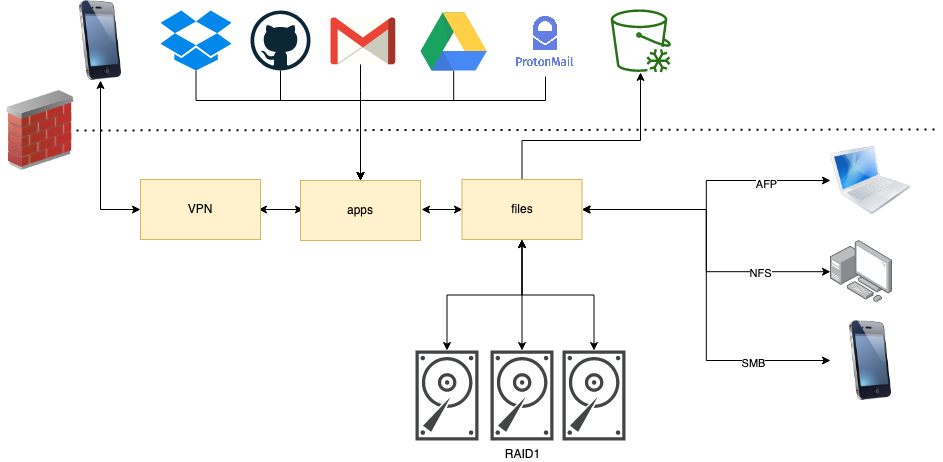

Experiencing any sort of data loss makes you paranoid about backing up. Between hard drives dying, rm -rf'ing directories, and closing accounts without exporting, I've had my share of data loss events. Because of that, I am always examining my file backup solution and attempting to be as comprehensive and redundant as possible. (Within reason!) In the past I've leaned more on homegrown solutions for backing up my cloud accounts in general, but this year I've been attempting to use more existing tools along with trying to move most of my mass file storage out of the cloud as a primary access point. Here's a look at that layout.

At my house, I run a small fleet of Raspberry Pis (mostly 4s) that host a number of services. First, I have one Pi attached to a RAID1 array set up to act as a local file server. Devices on my home network (managed by OpenWRT) can access the file server over SMB, AFP, and NFS. That file server holds about 1TB of data and gets backed up every 24 hours to AWS Glacier. I store most project and personal files on this file server rather than any one of my local machines.

In addition, I have a Raspberry Pi running a docker-compose stack containing a few different applications. It hosts some side-project work, and it backs up data for those services to the file server (which then also gets backed up to AWS Glacier). More specific to this topic, this docker-compose stack runs a set of containers that regularly download files from various cloud services and back up the data to the file server:

Rclone:

Think of rclone as rsync for the cloud! I have one container running with rclone installed that syncs down files from my Google Drive and Dropbox accounts every 24 hours. A cool feature of rclone is that for Google Drive it automatically converts Google formats into their counterpart file formats (e.g., Google Docs -> Word, Google Sheets -> Excel).
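
A minimal sketch of what one sync pass could look like, written in Python purely for illustration. The remote names `gdrive` and `dropbox` and the `/mnt/fileserver/cloud` path are placeholders, not the values from my stack; the remotes are assumed to already exist in the rclone config.

```python
import subprocess
from pathlib import Path

# Hypothetical remote names, as they might be defined via `rclone config`.
REMOTES = ["gdrive", "dropbox"]
# Hypothetical mount point of the file server inside the container.
BACKUP_ROOT = Path("/mnt/fileserver/cloud")


def build_sync_command(remote: str, backup_root: Path = BACKUP_ROOT) -> list[str]:
    """Return the rclone invocation that mirrors one remote into its own folder."""
    cmd = ["rclone", "sync", f"{remote}:", str(backup_root / remote)]
    if remote == "gdrive":
        # Export Google Docs, Sheets, and Slides as Office files.
        cmd += ["--drive-export-formats", "docx,xlsx,pptx"]
    return cmd


def sync_all() -> None:
    """Run one sync pass; a scheduler (cron, a sleep loop) would call this daily."""
    for remote in REMOTES:
        subprocess.run(build_sync_command(remote), check=True)
```

Keep in mind that `rclone sync` makes the destination match the source, so a file deleted in the cloud is also deleted locally on the next pass; `rclone copy` is the alternative if you would rather keep everything.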

GitHub:

This is still more of a homegrown solution, but it works. For this service I have a container use the GitHub CLI to grab a list of all repos I own, and then a Bash script iterates through that list and syncs each Git repo. This also runs every 24 hours.
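
The list-then-sync loop can be sketched roughly as follows. This is a Python illustration rather than the Bash script itself, and it makes a few assumptions: `gh` is already authenticated, repos are kept as bare mirrors (`git clone --mirror`), and the folder naming scheme is made up for the example.

```python
import json
import subprocess
from pathlib import Path

# Lists repos for the authenticated user; the limit of 1000 is an arbitrary ceiling.
LIST_COMMAND = ["gh", "repo", "list", "--limit", "1000", "--json", "nameWithOwner,sshUrl"]


def plan_repo_sync(repo: dict, dest_root: Path) -> list[str]:
    """Clone a mirror on the first run, then only update it on later runs."""
    # Hypothetical naming scheme: "owner/name" becomes "owner__name.git".
    target = dest_root / (repo["nameWithOwner"].replace("/", "__") + ".git")
    if target.exists():
        return ["git", "-C", str(target), "remote", "update", "--prune"]
    return ["git", "clone", "--mirror", repo["sshUrl"], str(target)]


def sync_all(dest_root: Path) -> None:
    """Fetch the repo list from the GitHub CLI and sync every entry."""
    listing = subprocess.run(LIST_COMMAND, check=True, capture_output=True, text=True)
    for repo in json.loads(listing.stdout):
        subprocess.run(plan_repo_sync(repo, dest_root), check=True)
```

A mirror keeps every branch and tag, which suits a backup better than a working checkout, but a plain `git clone` followed by `git pull` would work too.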

OfflineIMAP + Proton Bridge

Often overlooked but incredibly important: I back up emails too. For that I use a tool called OfflineIMAP that syncs down any IMAP mailbox to a local Maildir backup. (You can actually use a tool called Mutt to use this local Maildir like an email client.) In addition, I use Proton Bridge running privately in this container to tunnel a connection to ProtonMail's servers. This also runs every 24 hours.
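
Because the result is a plain Maildir, it is easy to sanity-check with nothing but Python's standard library. The sketch below is not part of my stack; it assumes each mail folder sits as its own Maildir directly under one root directory, and simply counts the messages in each.

```python
import mailbox
from pathlib import Path


def count_messages(maildir_root: Path) -> dict[str, int]:
    """Map each Maildir folder directly under the root to its message count."""
    counts = {}
    for folder in sorted(p for p in maildir_root.iterdir() if p.is_dir()):
        # Skip directories that are not Maildirs (a Maildir always has cur/ and new/).
        if not (folder / "cur").is_dir() or not (folder / "new").is_dir():
            continue
        # create=False: never create a mailbox as a side effect of checking one.
        box = mailbox.Maildir(str(folder), create=False)
        counts[folder.name] = len(box)
        box.close()
    return counts
```

Comparing these counts from one day to the next is a cheap way to notice that a sync has silently stopped.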

LastPass

Lastly, I back up my LastPass account to a CSV using their CLI every 24 hours.
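
A rough sketch of that export step, again in Python for illustration. It assumes the `lpass` CLI is installed and already logged in; the dated file name and the backup directory are made up for the example. Since the CSV holds passwords in plain text, the file is created readable by its owner only.

```python
import os
import subprocess
from datetime import date
from pathlib import Path


def export_path(backup_dir: Path, day: date) -> Path:
    """Hypothetical naming scheme: one CSV per day, e.g. lastpass-2021-01-02.csv."""
    return backup_dir / f"lastpass-{day.isoformat()}.csv"


def export_vault(backup_dir: Path) -> Path:
    """Write the output of `lpass export` to today's CSV and return its path."""
    target = export_path(backup_dir, date.today())
    result = subprocess.run(["lpass", "export"], check=True, capture_output=True)
    # Create the file with owner-only permissions before any data is written.
    fd = os.open(target, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "wb") as handle:
        handle.write(result.stdout)
    return target
```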

A cool thing to note with this setup is the number of open source tools it uses that come from the cloud providers themselves. While I need to use a third-party solution (Rclone) for Dropbox and Google Drive, the other services rely on open source tools maintained by those services, which has made it easy to set all of this up on an ARM-based system like the Raspberry Pi.

Finally, you can reference the docker-compose stack I use (with private info scrubbed) to set up your own backup solution: https://github.com/johnjones4/apps.local.johnjonesfour.com.